As artificial intelligence continues to transform the way we communicate, it is essential to understand the underlying mechanisms at work. One such mechanism is tokenization, used by OpenAI in processing text data.

In this blog post, we will explore what tokens are and how to interpret their value when working with OpenAI’s GPT models.

Tokens in OpenAI Language Models

Tokens are the fundamental units that language models use to process text. In OpenAI’s GPT models, tokens can be thought of as pieces of words: a token may be a whole word, a sub-word, or a fragment that includes a trailing space. Tokenization also depends on the language, so the same number of characters can produce more or fewer tokens in one language than in another.

To put things into perspective, here’s an approximate breakdown of tokens for the English language:

- 1 token ≈ 4 characters

- 1 token ≈ ¾ words

- 100 tokens ≈ 75 words

By the same ratio, 1,000 tokens translate to roughly 750 English words.
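The rules of thumb above can be turned into a quick estimator. The sketch below is only an approximation for English text based on the ratios listed here; the function names are made up for illustration, and it does not run OpenAI's actual tokenizer, so real counts will differ.

```python
# Rough token estimates for English text, using the rules of thumb
# 1 token ~ 4 characters and 1 token ~ 3/4 of a word.
# These are approximations, not output from a real tokenizer.

CHARS_PER_TOKEN = 4      # approximate, English only
WORDS_PER_TOKEN = 0.75   # approximate, English only


def estimate_tokens_from_chars(text: str) -> int:
    """Estimate the token count from the number of characters."""
    return round(len(text) / CHARS_PER_TOKEN)


def estimate_tokens_from_words(word_count: int) -> int:
    """Estimate the token count from a word count."""
    return round(word_count / WORDS_PER_TOKEN)


def estimate_words_from_tokens(token_count: int) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(token_count * WORDS_PER_TOKEN)


if __name__ == "__main__":
    print(estimate_words_from_tokens(1000))   # about 750 words
    print(estimate_tokens_from_words(750))    # about 1,000 tokens
```

For exact counts you would need to run the text through the tokenizer that matches the model you are calling.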

How Tokens Impact Usage and Pricing

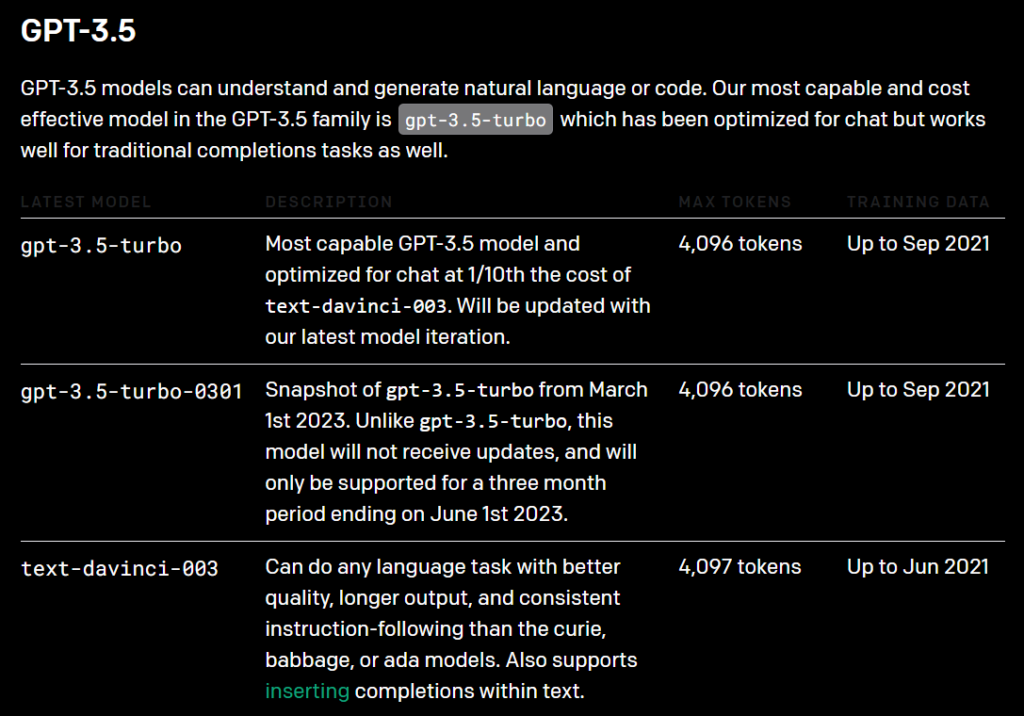

In applications that use OpenAI’s APIs, tokens determine usage limits, response sizes, and the cost of each request. Each model, such as davinci or ada, has its own token limit, response time, and pricing structure based on its capabilities.

For example, the GPT-3.5 model has a maximum limit of 4,096 tokens. That limit is shared between the input prompt and the generated output, so if your prompt uses 4,000 tokens, the completion can contain at most 96 tokens. Keep this in mind when working with long prompts or large-scale text generation projects.

The newer GPT-4 Turbo model has a token limit of 128,000, the highest at the time of writing.
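The shared budget described above is simple subtraction, which a small helper makes explicit. This is a minimal sketch: the function name is hypothetical, and the default limit of 4,096 is just the GPT-3.5 figure quoted in this post, so pass the limit of whichever model you actually use.

```python
def max_completion_tokens(prompt_tokens: int, token_limit: int = 4096) -> int:
    """Return how many tokens remain for the completion.

    The prompt and the completion share the same token limit, so the
    space left for output is the limit minus the prompt size.
    """
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must not be negative")
    # A prompt at or over the limit leaves no room for a completion.
    return max(0, token_limit - prompt_tokens)


if __name__ == "__main__":
    print(max_completion_tokens(4000))  # 96
    print(max_completion_tokens(5000))  # 0
```

A check like this before sending a request helps you decide whether the prompt needs to be shortened to leave room for the answer.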

Token pricing for API requests depends on the model being used and on the number of tokens in the request. Full details are available on OpenAI’s Pricing page.
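Since cost scales with token count, a rough estimate is just tokens multiplied by a per-token rate. The sketch below assumes prices are quoted per 1,000 tokens with separate rates for prompt and completion tokens; the function name is hypothetical and the prices in the example are placeholders, not OpenAI's actual rates, so substitute the current figures from the Pricing page.

```python
def estimate_request_cost(
    prompt_tokens: int,
    completion_tokens: int,
    prompt_price_per_1k: float,
    completion_price_per_1k: float,
) -> float:
    """Estimate the cost of one request.

    Assumes prices are given per 1,000 tokens, with separate rates
    for the prompt (input) and the completion (output).
    """
    prompt_cost = prompt_tokens / 1000 * prompt_price_per_1k
    completion_cost = completion_tokens / 1000 * completion_price_per_1k
    return prompt_cost + completion_cost


if __name__ == "__main__":
    # Placeholder prices for illustration only, not real OpenAI rates.
    cost = estimate_request_cost(
        prompt_tokens=1000,
        completion_tokens=500,
        prompt_price_per_1k=0.01,
        completion_price_per_1k=0.03,
    )
    print(f"Estimated cost: ${cost:.4f}")
```

If a model charges a single rate for all tokens, pass the same price for both arguments.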

Understanding the Value of 1,000 OpenAI Tokens

To summarize, tokenization is central to how OpenAI’s GPT models process text.

Since 1,000 tokens translate to roughly 750 English words, developers need to account for token limits, language differences, and pricing when building applications on the OpenAI API.

You can use the OpenAI pricing estimator to estimate how much it will cost to use the OpenAI API. It gives you an estimated price based on a certain number of words.